Research

Controllable Lesion Data Synthesis

Scaling laws are widely regarded as a cornerstone of medical foundation models. However, collecting sufficient training data, particularly lesion data, often remains out of reach in clinical practice. We use generative models to disentangle lesion attributes and create diverse, annotated lesion data in a controllable manner. This not only facilitates the training of medical AI but also provides a benchmark for robustness assessment and could serve as a medical education tool.

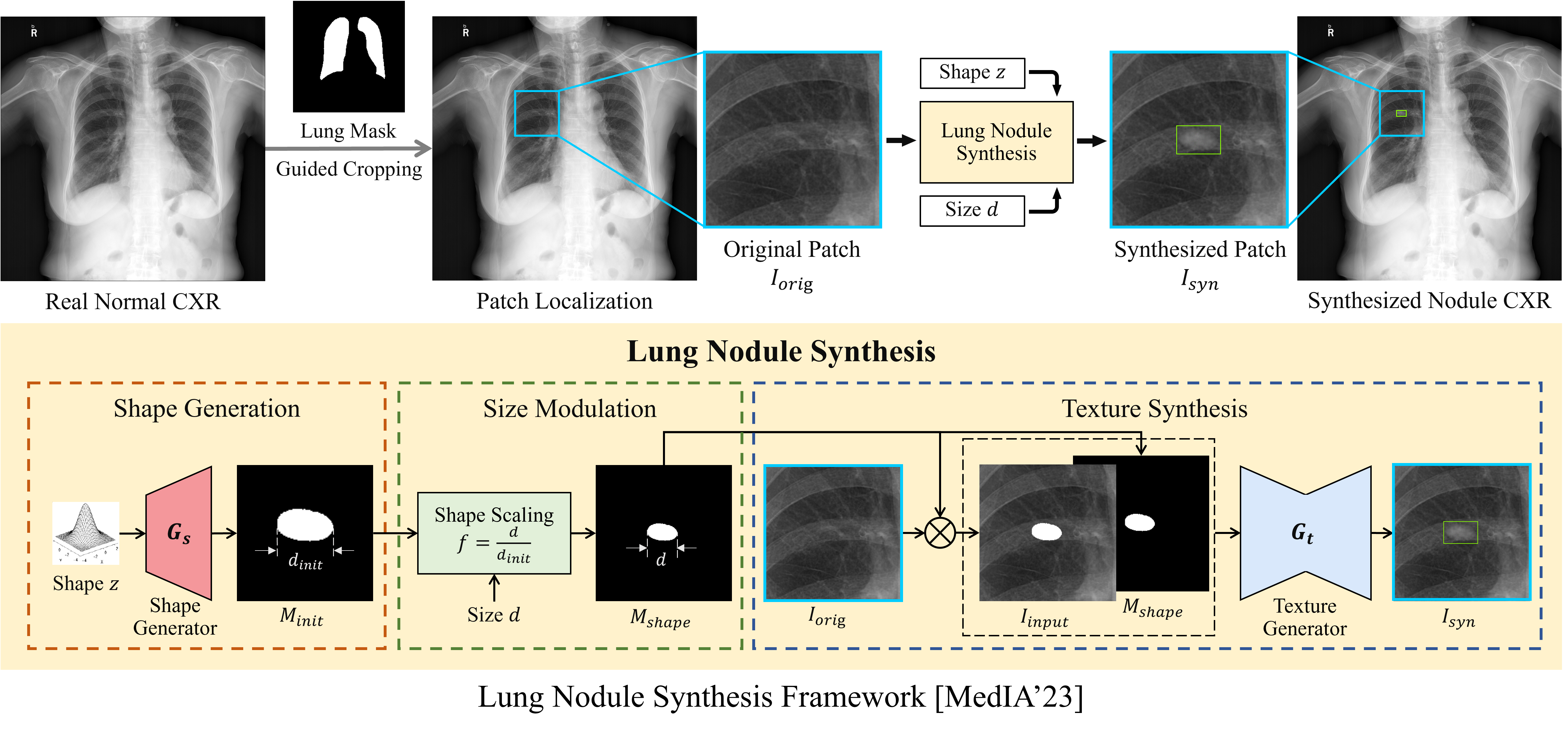

Chest X-ray Lung Nodule Synthesis for Lung Nodule Detection

- We proposed a lung nodule synthesis framework that disentangles nodule attributes (i.e., shape, size, and texture) and synthesizes nodules in a controllable manner. We leveraged the controllability of the framework to design a hard example mining strategy for data augmentation in lung nodule detection.
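As a rough illustration of how controllable synthesis can feed hard example mining, the sketch below samples nodule attributes, scores each candidate with a detector, and keeps the candidates the detector is least confident about. This is not the published method: the attribute values, the size range, `toy_detector_score`, and `keep_ratio` are all hypothetical stand-ins chosen for the example.

```python
import random


def sample_nodule_attributes(rng):
    """Draw one set of controllable attributes (all values are hypothetical)."""
    return {
        "shape": rng.choice(["round", "lobulated", "irregular"]),
        "size": rng.uniform(5.0, 30.0),  # assumed size range, in mm
        "texture": rng.choice(["low-contrast", "high-contrast"]),
    }


def toy_detector_score(nodule):
    """Stand-in for detector confidence in [0, 1].

    Toy assumption: smaller nodules are harder to detect. In practice this
    would be the detector's confidence on the synthesized image.
    """
    return min(1.0, nodule["size"] / 30.0)


def mine_hard_examples(candidates, detector_score, keep_ratio=0.25):
    """Keep the fraction of candidates with the lowest detector confidence."""
    if not candidates:
        return []
    ranked = sorted(candidates, key=detector_score)  # hardest first
    n_keep = max(1, int(len(ranked) * keep_ratio))
    return ranked[:n_keep]


rng = random.Random(0)
candidates = [sample_nodule_attributes(rng) for _ in range(40)]
hard = mine_hard_examples(candidates, toy_detector_score, keep_ratio=0.25)
```

The selected `hard` candidates would then be synthesized into training images, so that augmentation concentrates on the cases the current detector handles worst.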

Relevant Publications:

- Image Synthesis with Disentangled Attributes for Chest X-ray Nodule Augmentation and Detection [MedIA’23]

- Nodule Synthesis and Selection for Augmenting Chest X-ray Nodule Detection [PRCV’21]

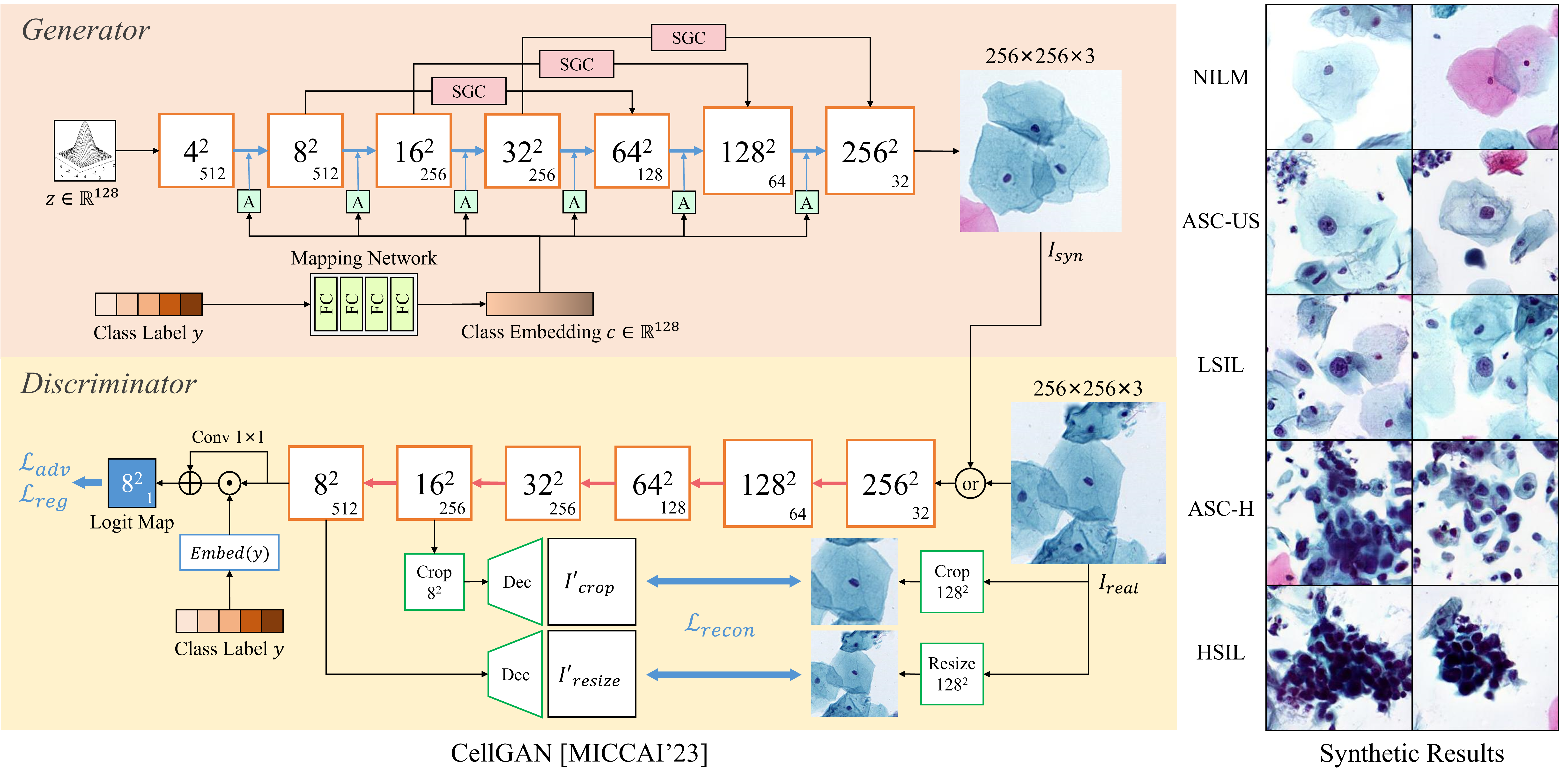

Cervical Cytological Image Synthesis for Cervical Abnormality Screening

- We proposed CellGAN, a class-conditional GAN, to synthesize cervical cytological image patches of various cervical cell types, aimed at enhancing patch-level cervical cell classification.

- We incorporated CellGAN into a knowledge distillation framework for multi-class abnormal cervical cell detection, where the synthesized cells enable class-balanced pre-training of the teacher network.

- We proposed a two-stage diffusion-based framework that hierarchically generates a global cervical cytological image and local abnormal cervical cells to augment image-level abnormal cervical cell detection.
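To make the idea of class-balanced pre-training concrete, the sketch below computes how many synthetic patches a class-conditional generator would need to produce per class so that every class matches the most frequent one. The class names and counts are placeholders, and topping up to the majority class is only one possible balancing rule, assumed here for illustration.

```python
from collections import Counter


def synthetic_quota(labels):
    """Number of synthetic samples needed per class to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}


# Placeholder label distribution for real patches (not real data).
labels = ["normal"] * 6 + ["type_a"] * 3 + ["type_b"] * 2 + ["type_c"] * 1
quota = synthetic_quota(labels)
```

Each quota would be passed to the generator as a class condition, so the rarest cell types receive the most synthetic samples.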

Relevant Publications:

- CellGAN: Conditional Cervical Cell Synthesis for Augmenting Cytopathological Image Classification [MICCAI’23 Early Accept]

- Distillation of Multi-class Cervical Lesion Cell Detection via Synthesis-aided Pre-training and Patch-level Feature Alignment [Neural Networks’24]

- Two-stage Cytopathological Image Synthesis for Augmenting Cervical Abnormality Screening

Cross-Modality Medical Image Synthesis

Multi-modal medical imaging information is the cornerstone of precision medicine, yet a common challenge is the limited availability of certain imaging modalities in clinical practice. Cross-modality image synthesis can impute target-modality images from source-modality images, which makes it a useful tool in multi-modal studies. The correlation established between different modalities can also be leveraged for other clinical and research purposes, such as anomaly detection and PET attenuation correction.

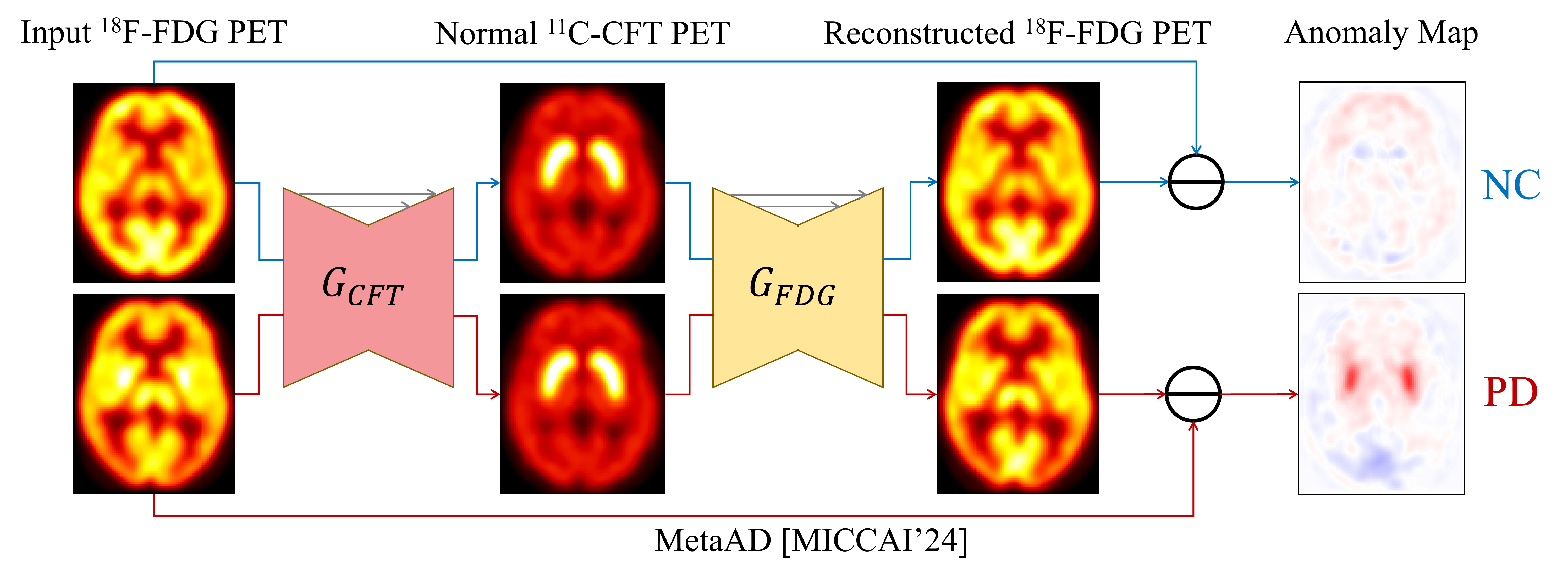

18F-FDG PET Anomaly Detection for Parkinson’s Disease (PD) Diagnosis

- We propose a Metabolism-aware Anomaly Detection (MetaAD) framework, which leverages a cyclic cross-modality image translation workflow to identify abnormal metabolism cues of PD in 18F-FDG PET scans.
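One common way to use a cyclic translation workflow for anomaly detection is to translate a scan to another modality and back, then treat the voxel-wise reconstruction error as an anomaly map. The sketch below illustrates that general idea only. It assumes translators trained on healthy subjects, which therefore reconstruct normal patterns well and abnormal ones poorly; `toy_forward` and `toy_backward` are hypothetical stand-ins for the learned networks, and residual scoring is an assumption rather than a description of MetaAD.

```python
def cyclic_anomaly_map(volume, forward, backward):
    """Voxel-wise |x - backward(forward(x))| for a flattened volume."""
    reconstructed = backward(forward(volume))
    return [abs(x - r) for x, r in zip(volume, reconstructed)]


def toy_forward(volume):
    """Stand-in for source-to-target translation.

    Toy assumption: the model only represents intensities in the "normal"
    range [0, 1], so values outside it are clipped before mapping.
    """
    return [2.0 * min(max(v, 0.0), 1.0) for v in volume]


def toy_backward(volume):
    """Stand-in for target-to-source translation (inverse of the scaling)."""
    return [v / 2.0 for v in volume]


scan = [0.2, 0.5, 1.6, 0.9]  # third voxel lies outside the normal range
anomaly = cyclic_anomaly_map(scan, toy_forward, toy_backward)
```

In this toy case only the third voxel produces a non-zero residual, because it is the only value the stand-in translators cannot reproduce after the round trip.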

Relevant Publications:

- MetaAD: Metabolism-Aware Anomaly Detection for Parkinson’s Disease in 3D 18F-FDG PET [MICCAI’24] (Young Scientist Award)

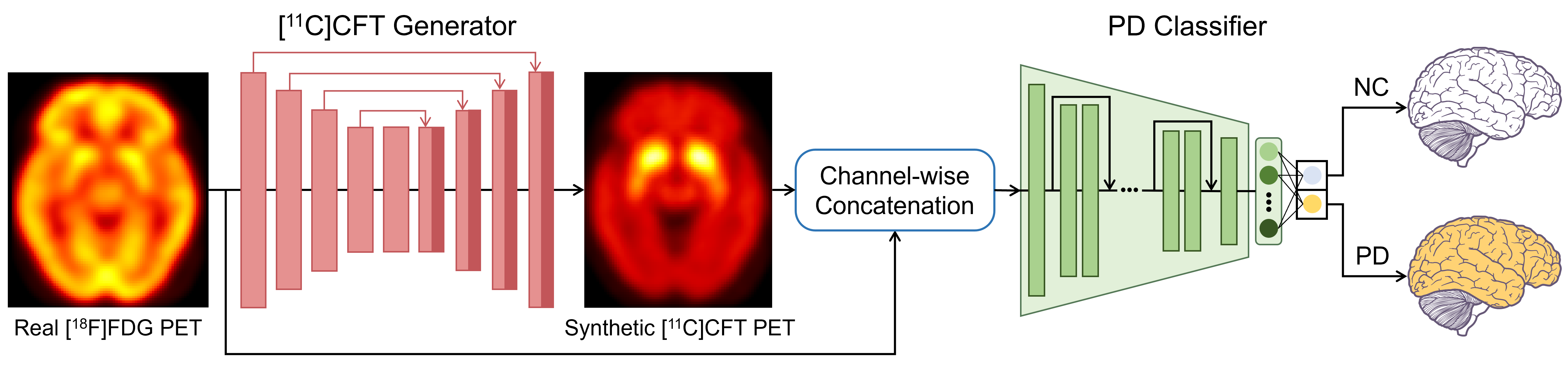

18F-FDG PET to 11C-CFT PET Synthesis for Parkinson’s Disease (PD) Diagnosis

- We propose a two-stage framework that synthesizes 11C-CFT PET images from real 18F-FDG PET scans for automatic PD diagnosis. The framework builds on the correlation between dopaminergic deficiency in the striatum and increased glucose metabolism in PD patients.

Relevant Publications:

- Cross-Modality PET Image Synthesis for Parkinson’s Disease Diagnosis: A Leap from [18F]FDG to [11C]CFT [EJNMMI’25]

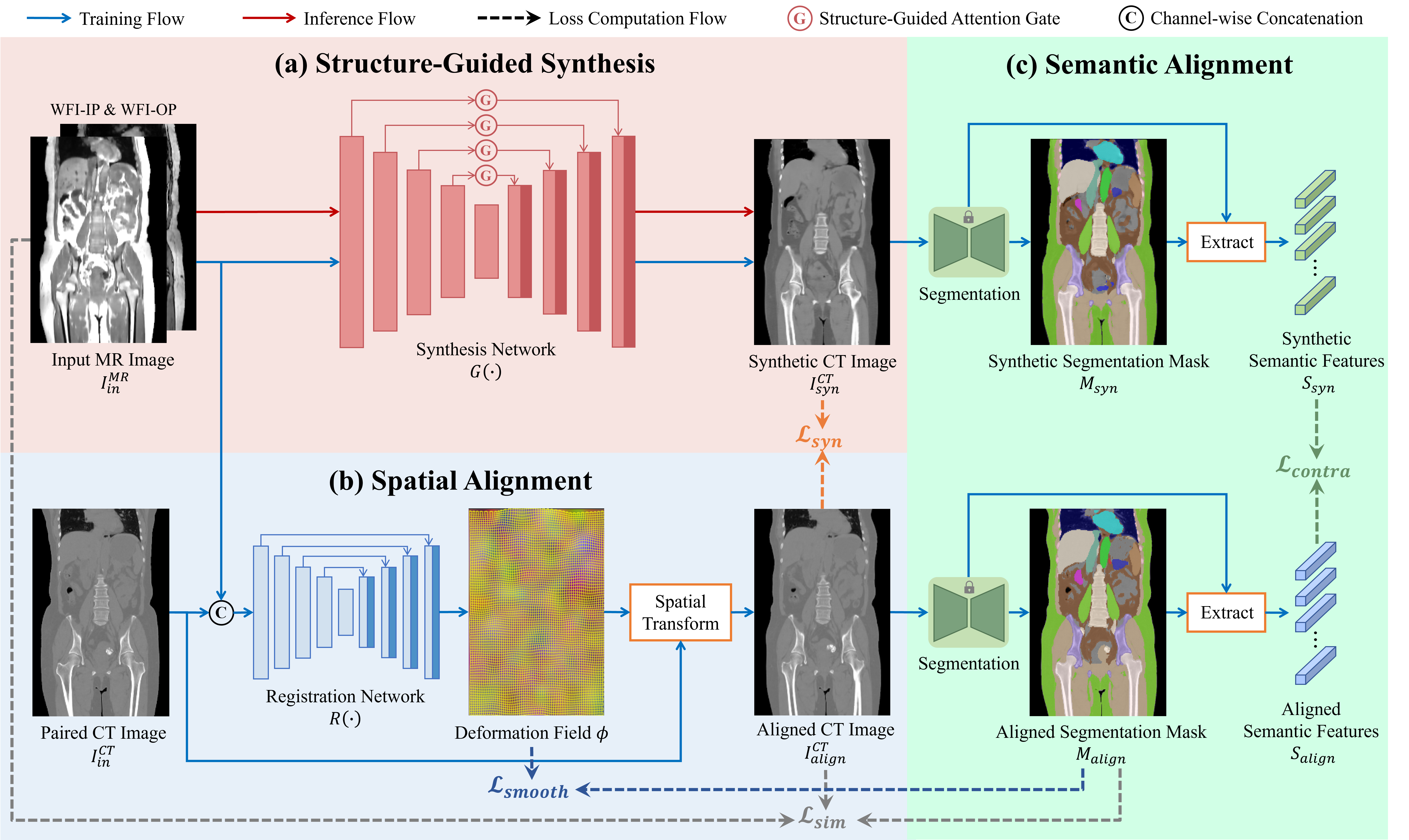

Whole-body MR-to-CT Synthesis for PET Attenuation Correction

- We propose a whole-body MR-to-CT synthesis framework that integrates structural guidance, spatial alignment, and semantic constraint to enhance synthetic CT image quality, thus facilitating PET attenuation correction in whole-body PET/MR imaging.

Relevant Publications:

- Structure-Guided MR-to-CT Synthesis with Spatial and Semantic Alignments for Attenuation Correction of Whole-Body PET/MR Imaging [MedIA’25]

Medical Image Quality Enhancement

Low-quality medical images can compromise diagnostic accuracy and clinical decision-making. We leverage image super-resolution and image restoration methods to improve image fidelity and structural detail, with the aim of increasing clinical utility and the reliability of diagnostic outcomes.

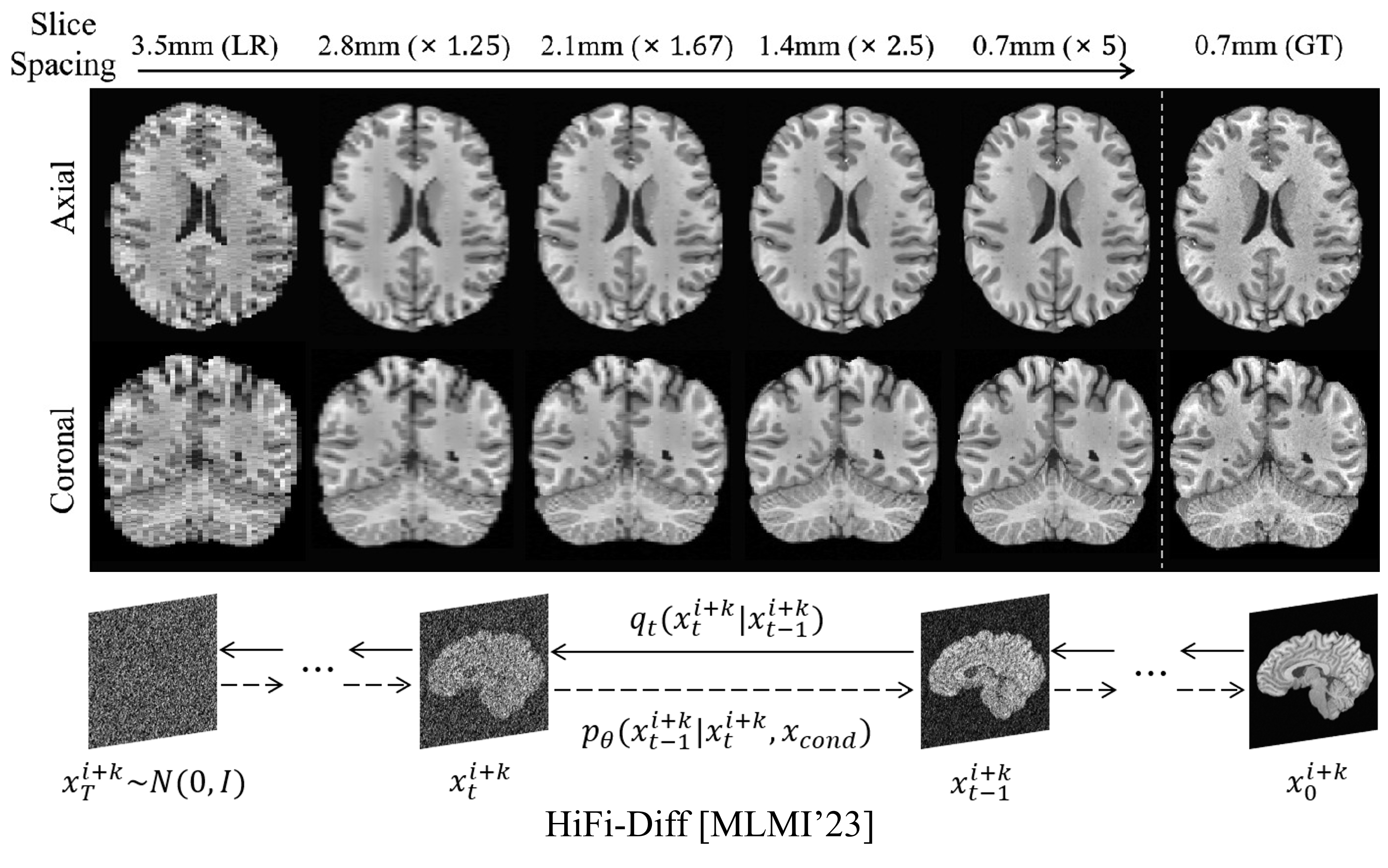

MRI Super-resolution for Arbitrary Inter-Slice Spacing Reduction

- We proposed Hierarchical Feature Conditional Diffusion (HiFi-Diff) for arbitrary MRI inter-slice spacing reduction, which generates any desired in-between MR slice from adjacent MR slices in the through-plane direction.
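The bookkeeping behind arbitrary spacing reduction can be sketched independently of the generative model: for a target spacing, each output slice is located between two adjacent input slices at some fractional position. The sketch below computes those positions and uses plain linear interpolation as a baseline stand-in for the generator. The function names and the linear baseline are assumptions for illustration and do not describe HiFi-Diff itself.

```python
def in_between_positions(n_slices, spacing_mm, target_spacing_mm):
    """Return (lower_index, fraction) for every output slice.

    `fraction` in [0, 1] is the relative position between input slices
    `lower_index` and `lower_index + 1`. Requires n_slices >= 2.
    """
    extent = (n_slices - 1) * spacing_mm
    positions = []
    k = 0
    while k * target_spacing_mm <= extent + 1e-9:
        z = k * target_spacing_mm / spacing_mm  # position in input-slice units
        lower = min(int(z + 1e-9), n_slices - 2)
        positions.append((lower, z - lower))
        k += 1
    return positions


def linear_slice(lower_slice, upper_slice, fraction):
    """Baseline stand-in for the generator: blend two flattened slices."""
    return [(1 - fraction) * a + fraction * b
            for a, b in zip(lower_slice, upper_slice)]


# Three input slices 5 mm apart, resampled to 2 mm spacing.
positions = in_between_positions(3, 5.0, 2.0)
middle = linear_slice([0.0, 2.0], [4.0, 6.0], 0.5)
```

A learned model would replace `linear_slice`, taking the two adjacent slices and the fractional position as conditioning inputs.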

Relevant Publications:

- Arbitrary Reduction of MRI Inter-slice Spacing Using Hierarchical Feature Conditional Diffusion [MLMI’23]